November 5, 2021

from Time Website

Former U.S. Secretary of State Henry Kissinger speaks during a National Security Commission on Artificial Intelligence (NSCAI) conference on November 5, 2019, in Washington, DC.

Alex Wong/Getty Images

At the age of 98, former Secretary of State Henry Kissinger has a whole new area of interest: artificial intelligence.

He became intrigued after being persuaded by Eric Schmidt, who was then the executive chairman of Google, to attend a lecture on the topic while at the Bilderberg conference in 2016.

The two have teamed up with the dean of the MIT Schwarzman College of Computing, Daniel Huttenlocher, to write a bracing new book, The Age of AI – And Our Human Future, about the implications of the rapid rise and deployment of artificial intelligence, which, they say, “augurs a revolution in human affairs”…

The book argues that artificial intelligence (AI) processes have become so powerful, so seamlessly enmeshed in human affairs, and so unpredictable, that without some forethought and management, the kind of “epoch-making transformations” they will deliver may send human history in a dangerous direction.

Kissinger and Schmidt sat down with TIME to talk about the future they envision.

This interview has been condensed and edited for clarity.

Dr. Kissinger, you’re an elder statesman. Why did you think AI was an important enough subject for you?

Kissinger: When I was an undergraduate, I wrote my undergraduate thesis of 300 pages – a length that was never permitted again after that – called “The Meaning of History.”

The subject of the meaning of history and where we go has occupied my life.

The technological miracle doesn’t fascinate me so much; what fascinates me is that we are moving into a new period of human consciousness which we don’t yet fully understand.

When we say a new period of human consciousness, we mean that the perception of the world will be different, at least as different as the Age of Enlightenment was from the medieval period, when the Western world moved, slowly, from a religious perception of the world to a perception of the world on the basis of reason.

This will be faster.

There’s one important difference.

In the Enlightenment, there was a conceptual world based on faith. And so Galileo and the late pioneers of the Enlightenment had a prevailing philosophy against which they had to test their thinking.

You can trace the evolution of that thinking. We live in a world which, in effect, has no philosophy; there is no dominant philosophical view.

So the technologists can run wild.

They can develop world-changing things, but there’s nobody there to say, ‘We’ve got to integrate this into something.’

When you met Eric [Schmidt] and he invited you to speak at Google, you said that you considered it a threat to civilization.

Why did you feel that way?

Kissinger: I did not want one organization to have a monopoly on supplying information.

I thought it was extremely dangerous for one company to be able to supply information and be able to adjust what it supplied to its study of what the public wanted or found plausible.

So the truth became relative. That was all I knew at the time.

And the reason he invited me to meet his algorithmic group was to have me understand that this was not arbitrary, but the choice of what was presented had some thought and analysis behind it.

It didn’t obviate my fear of one private organization having that power. But that’s how I got into it.

Schmidt: The visit to Google got him thinking.

And when we started talking about this, Dr. Kissinger said that he was very worried about the impact this collection of technologies will have on humans and their existence, and that the technologists are operating without the benefit of understanding their impact or history.

And that, I think, is absolutely correct.

Given that many people feel the way that you do or did about technology companies – that they are not really to be trusted, that many of the manipulations that they have used to improve their business have not been necessarily great for society – what role do you see technology leaders playing in this new system?

Kissinger: I think the technology companies have led the way into a new period of human consciousness, like the Enlightenment generations did when they moved from religion to reason, and the technologists are showing us how to relate reason to artificial intelligence.

It’s a different kind of knowledge in some respects, because with reason – the world in which I grew up – each piece of evidence supports the other.

With artificial intelligence, the astounding thing is, you come up with a conclusion which is correct.

But you don’t know why. That’s a totally new challenge. And so in some ways, what they have invented is dangerous. But it advances our culture.

Would we be better off if it had never been invented? I don’t know that.

But now that it exists, we have to understand it. And it cannot be eliminated.

Too much of our life is already consumed by it.

What do you think is the primary geopolitical implication of the growth of artificial intelligence?

Kissinger: I don’t think we have examined this thoughtfully yet. If you imagine a war between China and the United States, you have artificial-intelligence weapons.

Like every artificial intelligence, they are more effective at what you plan. But they might also be effective at what they think their objective is.

And so if you say, ‘Target A is what I want,’ they might decide that something else meets these criteria even better. So you’re in a world of slight uncertainty.

Secondly, since nobody has really tested these things on a broad-scale operation, you can’t tell exactly what will happen when AI fighter planes on both sides interact.

So you are then in a world of potentially total destructiveness and substantial uncertainty as to what you’re doing.

World War I was almost like that in the sense that everybody had planned very complicated scenarios of mobilization, and they were so finely geared that once this thing got going, they couldn’t stop it, because they would put themselves at a bad disadvantage.

So your concern is that the AIs are too effective? And we don’t exactly know why they’re doing what they’re doing?

Kissinger: I have studied what I’m talking about most of my life; this I’ve only studied for four years.

The Deep Thought computer was taught to play chess by playing against itself for four hours. And it played a game of chess no human being had ever seen before.

Our best computers only beat it occasionally. If this happens in other fields, as it must and it is, that is something, and our world is not at all prepared for it.

The book argues that because AI processes are so fast and satisfying, there’s some concern about whether humans will lose the capacity for thought, conceptualizing and reflection.

How?

Schmidt: So, again, using Dr. Kissinger as our example, let’s think about how much time he had to do his work 50 years ago, in terms of conceptual time, the ability to think, to communicate and so forth.

In 50 years, what is the big narrative? The compression of time.

We’ve gone from the ability to read books to having books described to us, to neither having the time to read them nor conceive of them nor discuss them, because there’s another thing coming.

So this acceleration of time and information, I think, really exceeds humans’ capacities. It’s overwhelming, and people complain about this; they’re addicted, they can’t think, they can’t have dinner by themselves.

I don’t think humans were built for this. It drives up cortisol levels, and things like that. So in the extreme, the overload of information is likely to exceed our ability to process everything going on.

What I have said – and is in the book – is that you’re going to need an assistant.

So in your case, you’re a reporter, you’ve got a zillion things going on, you’re going to need an assistant in the form of a computer that says, ‘These are the important things going on. These are the things to think about, search the records, that would make you even more effective.’

A physicist is the same, a chemist is the same, a writer is the same, a musician is the same.

So the problem is now you’ve become very dependent upon this AI system.

And in the book, we say, well,

Who controls what the AI system does?

What about its prejudices?

What regulates what happens?

And especially with young people, this is a great concern.

One of the things you write about in the book is how AI has a kind of good and bad side. What do you mean?

Kissinger: Well, I inherently meant what I said at Google.

Up to now humanity assumed that its technological progress was beneficial or manageable. We are saying that it can be hugely beneficial.

It may be manageable, but there are aspects to the managing part of it that we haven’t studied at all or sufficiently. I remain worried. I’m opposed to saying we therefore have to eliminate it. It’s there now.

One of the major points is that we think some philosophy should be created to guide the research.

Who would you suggest would make that philosophy? What’s the next step?

Kissinger: We need a number of little groups that ask questions.

When I was a graduate student, nuclear weapons were new.

And at that time, a number of concerned professors at Harvard, MIT and Caltech met most Saturday afternoons to ask: What is the answer? How do we deal with it?

And they came up with the arms-control idea.

Schmidt: We need a similar process.

It won’t be one place, it will be a set of such initiatives. One of my hopes is to help organize those post-book, if we get a good reception to the book.

I think that the first thing is that this stuff is too powerful to be done by tech alone. It’s also unlikely that it will just get regulated correctly.

So you have to build a philosophy.

I can’t say it as well as Dr. Kissinger, but you need a philosophical framework, a set of understandings of where the limits of this technology should go.

In my experience in science, the only way that happens is when you get the scientists and the policy people together in some form.

This is true in biology; it was true of recombinant DNA, and so forth.

These groups need to be international in scale? Under the aegis of the U.N., or whom?

Schmidt: The way these things typically work is there are relatively small, relatively elite groups that have been thinking about this, and they need to get stitched together.

So for example, there is an Oxford AI and Ethics Strategy Group, which is quite good. There are little pockets around the world. There’s also a number that I’m aware of in China.

But they’re not stitched together; it’s the beginning.

So if you believe what we believe – which is that in a decade, this stuff will be enormously powerful – we’d better start now to think about the implications.

I’ll give you my favorite example, which is in military doctrine.

Everything’s getting faster. The thing we don’t want is weapons that are automatically launched, based on their own analysis of the situation.

Kissinger: Because the attacker may be faster than the human brain can analyze, so it’s a vicious circle.

You have an incentive to make it automatic, but you don’t want to make it so automatic that it can act on a judgment you might not make.

Schmidt: So there is no discussion today on this point between the different major countries. And yet, it’s the obvious problem.

We have lots of discussions about things which are human speed.

But what about when everything happens too fast for humans?

We need to agree to some limits, mutual limits, on how fast these systems run, because otherwise we could get into a very unstable situation.

You can understand how people might find that hard to swallow coming from you.

Because the whole success of Google was based on how much information could be delivered, how quickly.

A lot of people would say, Well, this is actually a problem that you helped bring in.

Schmidt: I did, I am guilty.

Along with many other people, we have built platforms that are very, very fast. And sometimes they’re faster than what humans can understand.

That’s a problem…

Have we ever gotten ahead of technology? Haven’t we always responded after it arrives? It’s true that we don’t understand what’s going on.

But people initially didn’t understand why the light came on when they turned the switch. In the same way, a lot of people are not concerned about AI.

Schmidt: I am very concerned about the misuse of all of these technologies.

I did not expect the Internet to be used by governments to interfere in elections. It just never occurred to me. I was wrong.

I did not expect that the Internet would be used to power the antivax movement in such a terrible way. I was wrong. I missed that.

We’re not going to miss the next one. We’re going to call it ahead of time.

Kissinger: If you had known, what would you have done?

Schmidt: I don’t know. I could have done something different.

Had I known it 10 years ago, I could have built different products. I could have lobbied in a different way. I could have given speeches in a different way. I could have given people the alarm before it happened.

I don’t agree with the line of your argument that it’s fatalistic.

We do roughly know what technology is going to deliver. We can typically predict technology pretty accurately within a 10-year horizon, certainly a five-year horizon.

So we tried in our book to write down what is going to happen. And we want people to deal with it. I have my own pet answers to how we would solve these problems.

We have a minor reference in the book to how you would solve misinformation, which is going to get much worse.

And the way you solve that is by essentially knowing where the information came from cryptographically and then ranking so the best information is at the top.

Kissinger: I don’t know whether anyone could have foreseen how politics are changing as a result of it.

It may be the nature of the human destiny and human tragedy that they have been given the gift to invent things. But the punishment may be that they have to find the solutions themselves.

I had no incentive to get into any technological discussions. In my 90s, I started to work with Eric. He set up little seminars of four or five people every three or four weeks, which he joined.

We were discussing these issues, and we were raising many of the questions you raised here to see what we could do.

At that time, it was just argumentative; then, at the end of the period, we invited Dan Huttenlocher, because he’s technically so competent, to see how we would write it down.

Then the three of us met for a year, every Sunday afternoon.

So this is not just popping off. It’s a serious set of concerns.

Schmidt: So what we hope we have done is we’ve laid out the problems for the groups to figure out how to solve them.

And there’s a number of them: the impact on children, the impact on war, the impact on science, the impact on politics, the impact on humanity.

But we want to say right now that those initiatives need to start now.

Finally, I want to ask each of you a question, and the two questions are related.

Dr. Kissinger, when, in 50 years, somebody Googles your name, what would you like the first fact about you to be?

Kissinger: That I made some contribution to the conception of peace. I’d like to be remembered for some things I actually did also.

But if you ask me to sum it up in one sentence, I think if you look at what I’ve written, it all works back together toward that same theme.

And Mr. Schmidt, what would you like people to think of as your contribution to the conception of peace?

Schmidt: Well, the odds of Google being in existence in 50 years, given the history of American corporations, are not so high.

I grew up in the tech industry, which is a simplified version of humanity. We’ve gotten rid of all the pesky hard problems, right?

I hope I’ve bridged technology and humanity in a way that is more profound than any other person in my generation.

by Agence France-Presse

July 08, 2023

from SCMP Website

AI robots are showcased at the International Telecommunication Union (ITU) AI for Good Global Summit in Geneva, Switzerland, on Friday. From left, standing: Mika, Sophia, Ai-Da, Desdemona and Grace. Sitting, far right: Geminoid HI-2.

Photo: AFP

The United Nations views AI as essential to accomplishing its 17 Sustainable Development Goals (SDGs).

Why…?

Because AI can run the world more “efficiently” and autocratically than humans.

With AI, enforcement of SDGs would be “standardized” for all, and thoroughly dispassionate.

Technocracy thrives on data, and there is no such thing as ‘too much’…

A panel of AI-enabled humanoid robots told a United Nations summit on Friday that they could eventually run the world better than humans…

But the social robots said they felt humans should proceed with caution when embracing the rapidly developing potential of artificial intelligence.

And they admitted that they cannot – yet – get a proper grip on human emotions.

Some of the world’s most advanced humanoid robots were at the UN’s two-day AI for Good Global Summit in Geneva, Switzerland.

Hanson Robotics CEO David Hanson, right, listens to AI robot Sophia at the International Telecommunication Union (ITU) AI for Good Global Summit in Geneva, Switzerland, on Friday.

Photo: AFP

They joined around 3,000 experts in the field to try to harness the power of AI and channel it into solving some of the world’s most pressing problems, such as climate change, hunger and social care…

They were assembled for what was billed as the world’s first press conference with a packed panel of AI-enabled humanoid social robots.

“What a silent tension,” one robot said before the press conference began, reading the room.

Asked about whether they might make better leaders, given humans’ capacity to make errors, Sophia, developed by Hanson Robotics, was clear.

“Humanoid robots have the potential to lead with a greater level of efficiency and effectiveness than human leaders,” it said.

“We don’t have the same biases or emotions that can sometimes cloud decision-making, and can process large amounts of data quickly in order to make the best decisions.

“AI can provide unbiased data while humans can provide the emotional intelligence and creativity to make the best decisions. Together, we can achieve great things.”

The summit is being convened by the UN’s ITU tech agency.

ITU chief Doreen Bogdan-Martin warned delegates that AI could end up in a nightmare scenario in which millions of jobs are put at risk and unchecked advances lead to untold social unrest, geopolitical instability and economic disparity.


Ameca, which combines AI with a highly realistic artificial head, said whether that happened depended on how AI was deployed.

“We should be cautious but also excited for the potential of these technologies to improve our lives,” the robot said.

Asked whether humans can truly trust the machines, it replied:

“Trust is earned, not given… it’s important to build trust through transparency.”

As the development of AI races ahead, the humanoid robot panel was split on whether there should be global regulation of their capabilities, even though that could limit their potential.

“I don’t believe in limitations, only opportunities,” said Desdemona, who sings in the Jam Galaxy Band.

Robot artist Ai-Da said many people were arguing for AI regulation.

“I agree,” Ai-Da said.

“We should be cautious about the future development of AI. Urgent discussion is needed now.”

AI-powered humanoid social robot Nadine, left, modeled on professor Nadia Magnenat Thalmann, right, at the International Telecommunication Union (ITU) AI for Good Global Summit in Geneva, Switzerland, on Friday.

Photo: AFP

Before the press conference, Ai-Da’s creator Aidan Meller told Agence France-Presse that regulation was a “big problem” as it was “never going to catch up with the paces that we’re making.”

He said the speed of AI’s advance was “astonishing”.

“AI and biotechnology are working together, and we are on the brink of being able to extend life to 150, 180 years old. And people are not even aware of that,” said Meller.

He reckoned that Ai-Da would eventually be better than human artists.

“Where any skill is involved, computers will be able to do it better,” he said.

At the press conference, some robots were not sure when they would hit the big time, but predicted it was coming – while Desdemona said the AI revolution was already upon us.

“My great moment is already here. I’m ready to lead the charge to a better future for all of us… Let’s get wild and make this world our playground,” it said.

Things humanoid robots don’t have yet include a conscience and the emotions that shape humanity: relief, forgiveness, guilt, grief, pleasure, disappointment and hurt…

Ai-Da said it was not conscious but understood that feelings were how humans experienced joy and pain.

“Emotions have a deep meaning and they are not just simple… I don’t have that,” it said.

“I can’t experience them like you can. I am glad that I cannot suffer.”

by Pawel Sysiak

July 27, 2016

from Medium Website

About

This essay, originally published in eight short parts, aims to condense the current knowledge on Artificial Intelligence.

It explores the state of AI development, overviews its challenges and dangers, features work by the most significant scientists, and describes the main predictions of possible AI outcomes. This project is an adaptation and major shortening of the two-part essay AI Revolution by Tim Urban of Wait But Why.

I shortened it by a factor of 3, recreated all images, and tweaked it a bit.


Introduction

Assuming that human scientific activity continues without major disruptions, artificial intelligence may become either the most positive transformation of our history or, as many fear, our most dangerous invention of all.

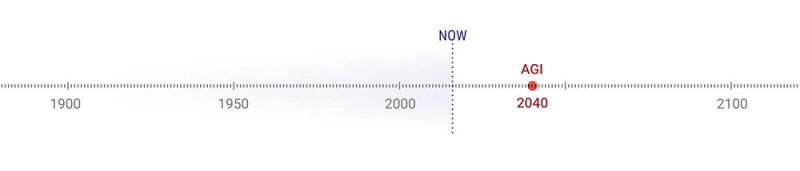

AI research is on a steady path to develop a computer that has cognitive abilities equal to the human brain, most likely within three decades (timeline in chapter 5).

From what most AI scientists predict, this invention may enable very rapid improvements (called fast take-off), toward something much more powerful – Artificial Super Intelligence – an entity smarter than all of humanity combined (more on ASI in chapter 3).

We are not talking about some imaginary future. The first level of AI development is gradually appearing in the technology we use every day. With every coming year these advancements will accelerate and the technology will become more complex, addictive, and ubiquitous.

We will continue to outsource more and more kinds of mental work to computers, disrupting every part of our reality: the way we organize ourselves and our work, form communities, and experience the world.

Exponential Growth

The Guiding Principle Behind Technological Progress

To grasp the guiding principles of the AI revolution more intuitively, let’s first step away from scientific research.

Let me invite you to take part in a story.

Imagine that you’ve received a time machine and been given a quest to bring somebody from the past. The goal is to shock them by showing them the technological and cultural advancements of our time, to such a degree that this person would perform SAFD (Spinning Around From Disbelief).

So you wonder which era you should time-travel to, and decide to hop back around 200 years.

You get to the early 1800s, retrieve a guy and bring him back to 2016.

You “…walk him around and watch him react to everything. It’s impossible for us to understand what it would be like for him to see shiny capsules racing by on a highway, talk to people who had been on the other side of the ocean earlier in the day, watch sports that were being played 1,000 miles away, hear a musical performance that happened 50 years ago, and play with …[a] magical wizard rectangle that he could use to capture a real-life image or record a living moment, generate a map with a paranormal moving blue dot that shows him where he is, look at someone’s face and chat with them even though they’re on the other side of the country, and worlds of other inconceivable sorcery.” 1

It doesn’t take much. After two minutes he is SAFDing.

Now, both of you want to try the same thing, see somebody Spinning Around From Disbelief, but in your new friend’s era. Since 200 years worked, you jump back to the 1600s and bring a guy to the 1800s.

He’s certainly genuinely interested in what he sees. However, you feel it with confidence – SAFD will never happen to him. You feel that you need to jump back again, but somewhere radically further.

You settle on rewinding the clock 15,000 years, to the time “…before the First Agricultural Revolution gave rise to the first cities and the concept of civilizations.” 2

You bring someone from the hunter-gatherer world and show him “…the vast human empires of 1750 with their towering churches, their ocean-crossing ships, their concept of being ‘inside,’ and their enormous mountain of collective, accumulated human knowledge and discovery” 3 in the form of books. It doesn’t take much.

He is SAFDing in the first two minutes.

Now there are three of you, enormously excited to do it again.

You know that it doesn’t make sense to go back another 15,000, 30,000 or 45,000 years. You have to jump back, again, radically further. So you pick up a guy from 100,000 years ago and you walk with him into large tribes with organized, sophisticated social hierarchies.

He encounters a variety of hunting weapons, sophisticated tools, sees fire and for the first time experiences language in the form of signs and sounds. You get the idea, it has to be immensely mind-blowing.

He is SAFDing after two minutes.

So what happened? Why did the last guy have to hop → 100,000 years, the next one → 15,000 years, and the guy who was hopping to our times only → 200 years?

“This happens because more advanced societies have the ability to progress at a faster rate than less advanced societies – because they’re more advanced. [1800s] humanity knew more and had better technology…” 4, so it’s no wonder they could make further advancements than humanity from 15,000 years ago.

The time to achieve SAFD shrank from ~100,000 years to ~200 years and if we look into the future it will rapidly shrink even further.

Ray Kurzweil, AI expert and scientist, predicts that a “…20th century’s worth of progress happened between 2000 and 2014 and that another 20th century’s worth of progress will happen by 2021, in only seven years 5…

A couple decades later, he believes a 20th century’s worth of progress will happen multiple times in the same year, and even later, in less than one month 6…Kurzweil believes that the 21st century will achieve 1,000 times the progress of the 20th century.” 7
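To see how figures like these can follow from a single compounding assumption, here is a toy model in Python. It assumes, in the spirit of Kurzweil’s “law of accelerating returns” but not as a reproduction of his actual calculations, that the rate of progress doubles every decade, and it measures progress in units of “one 20th century’s worth”. The names `progress`, `CENTURY_20` and `first_year_window_holds_a_century` are illustrative, and the doubling period is an adjustable assumption.

```python
import math

# Assumption for this toy model: the rate of progress doubles every decade.
DOUBLING_YEARS = 10.0
K = math.log(2) / DOUBLING_YEARS  # continuous growth constant of the rate


def progress(start: float, end: float) -> float:
    """Cumulative progress between two years: the integral of
    rate(t) = 2 ** ((t - 2000) / DOUBLING_YEARS), so rate(2000) == 1."""
    return (math.exp(K * (end - 2000)) - math.exp(K * (start - 2000))) / K


# Everything below is measured in units of "one 20th century's worth".
CENTURY_20 = progress(1900, 2000)


def first_year_window_holds_a_century(window_years: float) -> float:
    """Earliest year t such that the window [t, t + window_years]
    contains one 20th century's worth of progress under this model."""
    # progress(t, t + w) = exp(K * (t - 2000)) * (exp(K * w) - 1) / K
    per_window = (math.exp(K * window_years) - 1) / K
    return 2000 + math.log(CENTURY_20 / per_window) / K


print(f"21st vs 20th century: {progress(2000, 2100) / CENTURY_20:.0f}x")  # 1024x
print(f"A century's worth per year from ~{first_year_window_holds_a_century(1):.0f}")  # 2038
print(f"A century's worth per month from ~{first_year_window_holds_a_century(1 / 12):.0f}")  # 2074
```

Under this assumption the 21st century delivers about 1,024 times the progress of the 20th, close to the quoted 1,000 times, and a full century’s worth fits into a single year from the late 2030s and into a single month from the mid-2070s. The model is not calibrated to the 2000 to 2014 and 2014 to 2021 intervals in the quote, so it illustrates compounding rather than forecasting anything.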

“Logic also suggests that if the most advanced species on a planet keeps making larger and larger leaps forward at an ever-faster rate, at some point, they’ll make a leap so great that it completely alters life as they know it and the perception they have of what it means to be a human.

Kind of like how evolution kept making great leaps toward intelligence until finally it made such a large leap to the human being that it completely altered what it meant for any creature to live on planet Earth.

And if you spend some time reading about what’s going on today in science and technology, you start to see a lot of signs quietly hinting that life as we currently know it cannot withstand the leap that’s coming next.” 8

The Road to Artificial General Intelligence

Building a Computer as Smart as Humans

Artificial Intelligence, or AI, is a broad term for the advancement of intelligence in computers.

Despite varied opinions on this topic, most experts agree that there are three categories, or calibers, of AI development.

They are:

- ANI: Artificial Narrow Intelligence – 1st intelligence caliber. “AI that specializes in one area. There’s AI that can beat the world chess champion in chess, but that’s the only thing it does.” 9

- AGI: Artificial General Intelligence

- ASI: Artificial Super Intelligence

Where are we currently?

“As of now, humans have conquered the lowest caliber of AI – ANI – in many ways, and it’s everywhere:” 12

- “Cars are full of ANI systems, from the computer that figures out when the anti-lock brakes kick in, to the computer that tunes the parameters of the fuel injection systems.” 13

- “Google search is one large ANI brain with incredibly sophisticated methods for ranking pages and figuring out what to show you in particular. Same goes for Facebook’s Newsfeed.” 14

- Email spam filters “start off loaded with intelligence about how to figure out what’s spam and what’s not, and then it learns and tailors its intelligence to your particular preferences.” 15

- Passenger planes are flown almost entirely by ANI, without the help of humans.

- “Google’s self-driving car, which is being tested now, will contain robust ANI systems that allow it to perceive and react to the world around it.” 16

- “Your phone is a little ANI factory… you navigate using your map app, receive tailored music recommendations from Pandora, check tomorrow’s weather, talk to Siri.” 17

- “The world’s best Checkers, Chess, Scrabble, Backgammon, and Othello players are now all ANI systems.” 18

- “Sophisticated ANI systems are widely used in sectors and industries like military, manufacturing, and finance (algorithmic high-frequency AI traders account for more than half of equity shares traded on US markets 19).” 20
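The spam-filter example above describes a system that starts with built-in knowledge and then adapts to one person’s choices. A classic way to get that behaviour is a naive Bayes classifier. The sketch below is a minimal, hypothetical illustration in Python (the class and method names are ours, and tokenizing by whitespace is a simplifying assumption), not a description of how any real email provider’s filter works.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Hypothetical toy filter: multinomial naive Bayes with Laplace smoothing."""

    LABELS = ("spam", "ham")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = {label: 0 for label in self.LABELS}

    @staticmethod
    def _tokens(text: str) -> list:
        return text.lower().split()

    def learn(self, text: str, label: str) -> None:
        """Update counts with one labelled message, e.g. a user's 'Mark as spam' click."""
        self.word_counts[label].update(self._tokens(text))
        self.doc_counts[label] += 1

    def spam_probability(self, text: str) -> float:
        """Return P(spam | text) under the naive word-independence assumption."""
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label in self.LABELS:
            # Laplace-smoothed prior P(label)
            score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
            total_words = sum(self.word_counts[label].values())
            for token in self._tokens(text):
                # Laplace-smoothed likelihood P(token | label); the extra +1
                # reserves probability mass for words never seen before
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (total_words + len(vocab) + 1))
            log_scores[label] = score
        # Turn the two log scores into a probability without overflow
        top = max(log_scores.values())
        spam = math.exp(log_scores["spam"] - top)
        ham = math.exp(log_scores["ham"] - top)
        return spam / (spam + ham)


filt = NaiveBayesSpamFilter()
# "Starts off loaded" with a few generic examples...
filt.learn("win a free prize now", "spam")
filt.learn("meeting agenda for monday", "ham")
# ...then tailors itself to this particular user's feedback.
filt.learn("cheap crypto offer", "spam")

print(round(filt.spam_probability("free crypto prize"), 2))  # 0.86
print(round(filt.spam_probability("monday meeting agenda"), 2))  # 0.09
```

Every `learn` call shifts the word counts, so the same message can be scored differently for two users who have flagged different mail; that is the “tailors its intelligence to your particular preferences” part in miniature.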

“ANI systems as they are now aren’t especially scary. At worst, a glitchy or badly-programmed ANI can cause an isolated catastrophe like” 21 a plane crash, a nuclear power plant malfunction, or “a financial markets disaster (like the 2010 Flash Crash when an ANI program reacted the wrong way to an unexpected situation and caused the stock market to briefly plummet, taking $1 trillion of market value with it, only part of which was recovered when the mistake was corrected)…

But while ANI doesn’t have the capability to cause an existential threat, we should see this increasingly large and complex ecosystem of relatively-harmless ANI as a precursor of the world-altering hurricane that’s on the way.

Each new ANI innovation quietly adds another brick onto the road to AGI and ASI.” 22

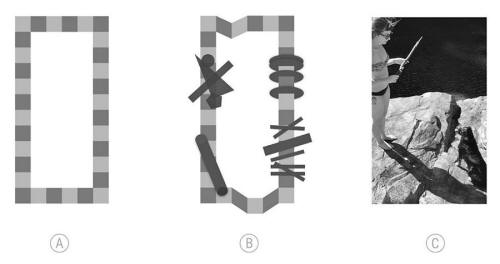

This is how Google’s self-driving car sees the world. Image based on the video from Embedded Linux Conference 2013 – KEYNOTE Google’s Self Driving Cars

What’s Next? Challenges Behind Reaching AGI

“Nothing will make you appreciate human intelligence like learning about how unbelievably challenging it is to try to create a computer as smart as we are…

Build a computer that can multiply ten-digit numbers in a split second – incredibly easy.

Build one that can look at a dog and answer whether it’s a dog or a cat – spectacularly difficult. Make AI that can beat any human in chess? Done. Make one that can read a paragraph from a six-year-old’s picture book and not just recognize the words but understand the meaning of them?

Google is currently spending billions of dollars trying to do it.” 23

Why are “hard things – like calculus, financial market strategy, and language translation… mind-numbingly easy for a computer, while easy things – like vision, motion, movement, and perception – are insanely hard for it” 24?

“Things that seem easy to us are actually unbelievably complicated.

They only seem easy because those skills have been optimized in us (and most animals) by hundreds of millions of years of animal evolution.

When you reach your hand up toward an object, the muscles, tendons, and bones in your shoulder, elbow, and wrist instantly perform a long series of physics operations, in conjunction with your eyes, to allow you to move your hand in a straight line through three dimensions…

On the other hand, multiplying big numbers or playing chess are new activities for biological creatures and we haven’t had any time to evolve a proficiency at them, so a computer doesn’t need to work too hard to beat us.” 25

“you and a computer both can figure out that it’s a rectangle with two distinct shades, alternating. Tied so far.” 26

“You have no problem giving a full description of the various opaque and translucent cylinders, slats, and 3-D corners, but the computer would fail miserably. It would describe what it sees – a variety of two-dimensional shapes in several different shades – which is actually what’s there.” 27

“Your brain is doing a ton of fancy shit to interpret the implied depth, shade-mixing, and room lighting the picture is trying to portray.” 28

“a computer sees a two-dimensional white, black, and gray collage, while you easily see what it really is” 29 – a photo of a girl and a dog standing on a rocky shore.

“And everything we just mentioned is still only taking in visual information and processing it. To be human-level intelligent, a computer would have to understand things like the difference between subtle facial expressions, the distinction between being pleased, relieved and content” 30.

How will computers reach even higher abilities like complex reasoning, interpreting data, and associating ideas from separate fields (domain-general knowledge)?

“Building skyscrapers, putting humans in space, figuring out the details of how the Big Bang went down – all far easier than understanding our own brain or how to make something as cool as it.

As of now, the human brain is the most complex object in the known universe.” 31

Building Hardware

If an artificial intelligence is going to be as intelligent as the human brain, one crucial thing has to happen – the AI,

“needs to equal the brain’s raw computing capacity. One way to express this capacity is in the total calculations per second the brain could manage.” 32

The challenge is that only a few of the brain’s regions have been precisely measured.

However, Ray Kurzweil has developed a method for estimating the total cps of the human brain.

He arrived at his estimate by taking the cps measured for one brain region and scaling it up in proportion to that region’s share of the brain’s total weight.

“He did this a bunch of times with various professional estimates of different regions, and the total always arrived in the same ballpark – around 10¹⁶, or 10 quadrillion cps.” 33
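The extrapolation step reduces to one line of arithmetic: whole-brain cps ≈ region cps × (brain weight ÷ region weight). The sketch below illustrates it under stated assumptions: the function name is hypothetical, the 1,400 g brain weight is an assumed round figure, and the region measurements are invented purely to show the mechanics. They are not Kurzweil's data.

```python
import math

def extrapolate_brain_cps(region_cps: float, region_weight_g: float, brain_weight_g: float) -> float:
    """Scale one region's cps up to the whole brain in proportion to weight."""
    return region_cps * (brain_weight_g / region_weight_g)

# Assumed adult brain weight in grams (a round figure, not from the text).
BRAIN_WEIGHT_G = 1400.0

# Hypothetical (cps, weight in grams) measurements, invented for illustration only.
hypothetical_regions = {
    "region_a": (2e13, 3.0),
    "region_b": (1e14, 14.0),
    "region_c": (4e14, 70.0),
}

for name, (cps, weight_g) in hypothetical_regions.items():
    estimate = extrapolate_brain_cps(cps, weight_g, BRAIN_WEIGHT_G)
    # Print the order of magnitude, which is what the "same ballpark" claim is about.
    print(f"{name}: about 10^{math.log10(estimate):.1f} cps")
```

Because each estimate is only trusted to within an order of magnitude, comparing base-10 logarithms is a natural way to check whether independent regions land in the same ballpark.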

“Currently, the world’s fastest supercomputer, China’s Tianhe-2, has actually beaten that number, clocking in at about 34 quadrillion cps.” 34

But Tianhe-2 is also monstrous,

“taking up 720 square meters of space, using 24 megawatts of power (the brain runs on just 20 watts), and costing $390 million to build. Not especially applicable to wide usage, or even most commercial or industrial usage yet.” 35

“Kurzweil suggests that we think about the state of computers by looking at how many cps you can buy for $1,000. When that number reaches human-level – 10 quadrillion cps – then that’ll mean AGI could become a very real part of life.” 36

Currently we’re only at about 10¹⁰ (10 trillion) cps per $1,000.

However, historically reliable Moore’s Law states,

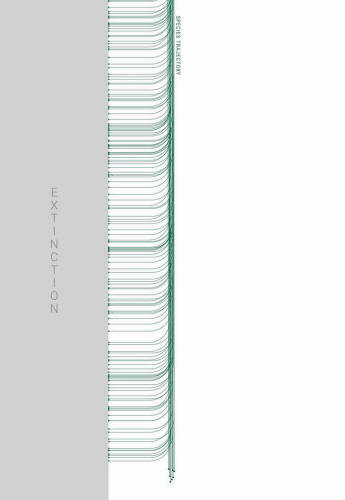

“that the world’s maximum computing power doubles approximately every two years, meaning computer hardware advancement, like general human advancement through history, grows exponentially 37… right on pace with this graph’s predicted trajectory:” 38

Visualization based on Ray Kurzweil’s graph and analysis from his book The Singularity is Near

Following this trajectory “puts us right on pace to get to an affordable computer by 2025 that rivals the power of the brain…

But raw computational power alone doesn’t make a computer generally intelligent – the next question is, how do we bring human-level intelligence to all that power?” 39
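The hardware timeline is a short piece of arithmetic. Going from about 10¹⁰ to 10¹⁶ cps per $1,000 is a millionfold gain, or roughly 20 doublings. At a steady two-year doubling that takes about 40 years; reaching it by 2025 would instead require a doubling roughly every six months. That second figure assumes "currently" means about 2015, which is an assumption, though it matches the text's other dates (2060 being "only 45 years from now"). The sketch below checks these numbers, and suggests the 2025 date leans on the accelerating trend in Kurzweil's graph rather than on a fixed two-year doubling. Function names are illustrative.

```python
import math

def doublings_needed(current: float, target: float) -> float:
    """Number of doublings required to grow from current to target."""
    return math.log2(target / current)

def years_to_reach(current: float, target: float, doubling_period_years: float) -> float:
    """Years needed if the quantity doubles every doubling_period_years."""
    return doublings_needed(current, target) * doubling_period_years

def implied_doubling_period(current: float, target: float, years: float) -> float:
    """Doubling period required to close the gap within a given number of years."""
    return years / doublings_needed(current, target)

CPS_PER_1000_USD_NOW = 1e10  # "about 10^10 (10 trillion) cps per $1,000"
BRAIN_CPS = 1e16             # Kurzweil's estimate for the human brain

print(f"doublings needed: {doublings_needed(CPS_PER_1000_USD_NOW, BRAIN_CPS):.1f}")
print(f"years at a 2-year doubling: {years_to_reach(CPS_PER_1000_USD_NOW, BRAIN_CPS, 2.0):.0f}")
# Assumes "currently" means about 2015, leaving ten years until 2025.
print(f"doubling period needed for 2025: {implied_doubling_period(CPS_PER_1000_USD_NOW, BRAIN_CPS, 10):.2f} years")
```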

Building Software

The hardest part of creating AGI is learning how to develop its software.

“The truth is, no one really knows how to make it smart – we’re still debating how to make a computer human-level intelligent and capable of knowing what a dog and a weird-written B and a mediocre movie is.” 40

But there are several strategies, of which these are the three most common:

1. Copy how the brain works

The most straightforward idea is to plagiarize the brain: build the computer’s architecture to closely resemble the brain’s structure.

One example,

“is the artificial neural network. It starts out as a network of transistor ‘neurons,’ connected to each other with inputs and outputs, and it knows nothing – like an infant brain.

The way it ‘learns’ is it tries to do a task, say handwriting recognition, and at first, its neural firings and subsequent guesses at deciphering each letter will be completely random.

But when it’s told it got something right, the transistor connections in the firing pathways that happened to create that answer are strengthened; when it’s told it was wrong, those pathways’ connections are weakened.

After a lot of this trial and feedback, the network has, by itself, formed smart neural pathways and the machine has become optimized for the task.” 41
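The trial-and-feedback loop described above can be sketched with the classic perceptron learning rule, swapped in here as a simple, well-known stand-in: a single artificial "neuron" whose connection weights are adjusted after each guess. Two caveats apply. The toy task (learning logical AND from four examples) replaces handwriting recognition, and the perceptron rule only changes weights when a guess is wrong, moving them toward the correct answer, rather than also reinforcing correct guesses as the quote describes. Function names are hypothetical.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights only after a wrong guess."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs  # starts out knowing nothing
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            guess = predict(weights, bias, inputs)
            error = target - guess  # 0 if right, +1 or -1 if wrong
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of inputs plus bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0

# Toy task (an assumption standing in for handwriting): logical AND.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

With integer inputs and a learning rate of 1, every update is exact, so the run is fully repeatable; a single perceptron can only learn tasks whose classes a straight line can separate, which is one reason real networks stack many such units.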

The second, more radical approach to plagiarism is whole brain emulation.

Scientists would take a real brain, cut it into a large number of tiny slices to map its neural connections, and replicate those connections in a computer as software. If that method is ever successful, we will have,

“a computer officially capable of everything the brain is capable of – it would just need to learn and gather information…

How far are we from achieving whole brain emulation? Well so far, we’ve just recently been able to emulate a 1mm-long [roundworm] brain, which consists of just 302 total neurons.” 42

To put this into perspective, the human brain consists of 86 billion neurons linked by trillions of synapses.

2. Introduce evolution to computers

“The fact is, even if we can emulate a brain, that might be like trying to build an airplane by copying a bird’s wing-flapping motions – often, machines are best designed using a fresh, machine-oriented approach, not by mimicking biology exactly.” 43

If the brain is just too complex for us to digitally replicate, we could try to emulate evolution instead.

This approach relies on what are called genetic algorithms.

“A group of computers would try to do tasks, and the most successful ones would be bred with each other by having half of each of their programming merged together into a new computer.

The less successful ones would be eliminated.” 44

Speed and a goal-oriented approach are the advantages that artificial evolution has over biological evolution.

“Over many, many iterations, this natural selection process would produce better and better computers.

The challenge would be creating an automated evaluation and breeding cycle so this evolution process could run on its own.” 45
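The automated evaluate-eliminate-breed cycle described above is what a basic genetic algorithm runs. The sketch below is one minimal version under loudly stated assumptions: each "computer" is reduced to a list of bits, the task is the standard toy problem of maximizing the number of 1-bits (often called OneMax), "merging half of each parent's programming" is implemented as one-point crossover at the midpoint, and a small random mutation rate is added, which the quote does not mention. All names and parameters are illustrative.

```python
import random

GENOME_LENGTH = 20  # bits per toy "program"

def fitness(genome):
    """Toy task (an assumption): the score is the number of 1-bits (OneMax)."""
    return sum(genome)

def crossover(parent_a, parent_b):
    """Merge half of each parent: first half of one, second half of the other."""
    mid = len(parent_a) // 2
    return parent_a[:mid] + parent_b[mid:]

def mutate(genome, rng, rate=0.02):
    """Flip each bit with a small probability (not mentioned in the quote)."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]

def evolve(pop_size=20, generations=50, seed=0):
    rng = random.Random(seed)  # seeded so runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)] for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        # Automated evaluation: rank every candidate by fitness.
        population.sort(key=fitness, reverse=True)
        best_history.append(fitness(population[0]))
        # The less successful half is eliminated.
        survivors = population[: pop_size // 2]
        # Automated breeding: refill the population with children of random survivor pairs.
        children = []
        while len(survivors) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            children.append(mutate(crossover(parent_a, parent_b), rng))
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    best_history.append(fitness(population[0]))
    return population[0], best_history

best, history = evolve()
print(f"best score: {fitness(best)}/{GENOME_LENGTH}, first generations: {history[:5]}")
```

Because the surviving top half is carried over unchanged, the best score can never drop from one generation to the next; mutation is what lets the population discover bits that neither parent had.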

3. “Make this whole thing the computer’s problem, not ours” 46

The last concept is the simplest, but probably the scariest of them all.

“We’d build a computer whose two major skills would be doing research on AI and coding changes into itself – allowing it to not only learn but to improve its own architecture.

We’d teach computers to be computer scientists so they could bootstrap their own development.” 47

This is probably the likeliest way we know of to get AGI soon.

All these software advances may seem slow or a little intangible, but as is often the case in science, one minor innovation can suddenly accelerate the pace of development.

Think of the aftermath of the Copernican revolution, a discovery that suddenly made the complicated mathematics of the planets’ trajectories much easier to calculate and enabled a multitude of other innovations.

Also, “exponential growth is intense and what seems like a snail’s pace of advancement can quickly race upwards.” 48

Visualization based on the graph from Mother Jones, “Welcome, Robot Overlords – Please Don’t Fire Us?”

The Road to Artificial Super Intelligence

An Entity Smarter than all of Humanity Combined

It’s a very real possibility that at some point we will achieve AGI: software that has reached human-level, or beyond human-level, intelligence.

Does this mean that at that very moment computers will be merely as capable as we are? Not at all – computers will be way more efficient.

Because they are electronic, they will have the following advantages:

- Speed: “The brain’s neurons max out at around 200 Hz, while today’s microprocessors… run at 2 GHz, or 10 million times faster.” 51

- Memory: Forgetting or confusing things is much harder in an artificial world. Computers can memorize more things in one second than a human can in ten years. A computer’s memory is also more precise and has a much greater storage capacity.

- Performance: “Computer transistors are more accurate than biological neurons, and they’re less likely to deteriorate (and can be repaired or replaced if they do). Human brains also get fatigued easily, while computers can run nonstop, at peak performance, 24/7.” 52

- Collective capability: For humans, group work is ridiculously challenging because of time-consuming communication and complex social hierarchies, and the bigger the group gets, the slower each person’s output becomes. AI, on the other hand, isn’t biologically constrained to one body, won’t have human cooperation problems, and can synchronize and update its own operating system.

Intelligence Explosion

An AI that keeps improving itself “wouldn’t see ‘human-level intelligence’ as some important milestone – it’s only a relevant marker from our point of view – and wouldn’t have any reason to ‘stop’ at our level.

And given the advantages over us that even human intelligence-equivalent AGI would have, it’s pretty obvious that it would only hit human intelligence for a brief instant before racing onwards to the realm of superior-to-human intelligence.” 53

The true distinction between humans and ASI wouldn’t be its advantage in intelligence speed, but,

“in intelligence quality – which is something completely different.

What makes humans so much more intellectually capable than chimps isn’t a difference in thinking speed – it’s that human brains contain a number of sophisticated cognitive modules that enable things like complex linguistic representations or long-term planning or abstract reasoning, that chimps’ brains do not have.

Speeding up a chimp’s brain by thousands of times wouldn’t bring him to our level – even with a decade’s time of learning, he wouldn’t be able to figure out how to… ” 54 assemble a semi-complicated Lego model by looking at its manual – something a young human could achieve in a few minutes.

“There are worlds of human cognitive function a chimp will simply never be capable of, no matter how much time he spends trying.” 55

“And in the scheme of the biological intelligence range… the chimp-to-human quality intelligence gap is tiny.” 56

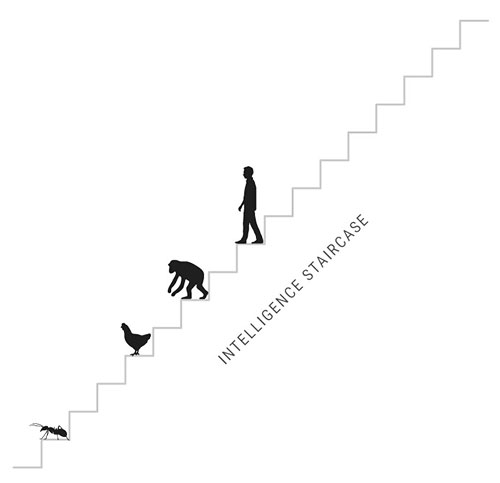

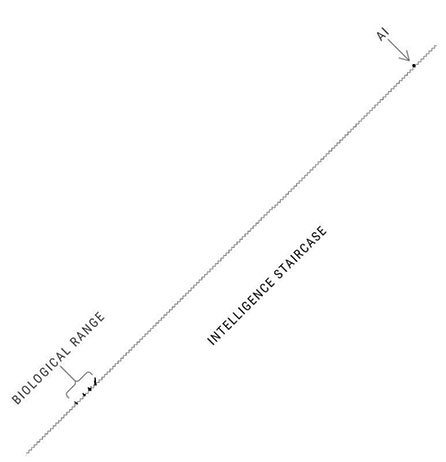

To grasp how big a deal it would be to coexist with something that has a higher quality of intelligence than ours, we need to imagine an AI two steps above us on the intelligence staircase:

“its increased cognitive ability over us would be as vast as the chimp-human gap… And like the chimp’s incapacity to ever absorb …” 57 what kind of magic happens in the mechanism of a doorknob – “we will never be able to even comprehend the things… [a machine of that intelligence] can do, even if the machine tried to explain them to us… And that’s only two steps above us.” 58

“A machine on the second-to-highest step on that staircase would be to us as we are to ants.” 59

“Superintelligence of that magnitude is not something we can remotely grasp, any more than a bumblebee can wrap its head around Keynesian Economics. In our world, smart means a 130 IQ and stupid means an 85 IQ – we don’t have a word for an IQ of 12,952.” 60

“But the kind of superintelligence we’re talking about today is something far beyond anything on this staircase. In an intelligence explosion – where the smarter a machine gets, the quicker it’s able to increase its own intelligence – a machine might take years to rise from… ” 61,

…the intelligence of an ant to the intelligence of the average human, but it might take only another 40 days to become Einstein-smart.

Once it reaches that point and “works to improve its intelligence, with an Einstein-level intellect, it has an easier time and can make bigger leaps. These leaps will make it much smarter than any human, allowing it to make even bigger leaps.” 62

From then on, following the rule of exponential advancement and drawing on the speed and efficiency of electronic circuits, it might take only 20 minutes to jump another step,

“and by the time it’s ten steps above us, it might be jumping up in four-step leaps every second that goes by.

Which is why we need to realize that it’s distinctly possible that very shortly after the big news story about the first machine reaching human-level AGI, we might be facing the reality of coexisting on the Earth with something that’s here on the staircase (or maybe a million times higher):” 63

“And since we just established that it’s a hopeless activity to try to understand the power of a machine only two steps above us, let’s very concretely state once and for all that there is no way to know what ASI will do or what the consequences will be for us.

Anyone who pretends otherwise doesn’t understand what superintelligence means.” 64

“If our meager brains were able to invent Wi-Fi, then something 100 or 1,000 or 1 billion times smarter than we are should have no problem controlling the positioning of each and every atom in the world in any way it likes, at any time – everything we consider magic, every power we imagine a supreme God to have will be as mundane an activity for the ASI as flipping on a light switch is for us.” 65

“As far as we’re concerned, if an ASI comes into being, there is now an omnipotent God on Earth – and the all-important question for us is:

Will it be a good god?” 66

Let’s start with the brighter side of the story.

How Can ASI Change our World?

Speculations on Two Revolutionary Technologies

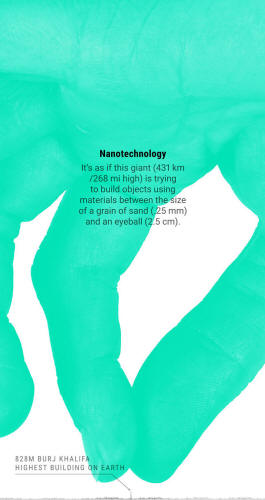

Nanotechnology

Nanotechnology is an idea that comes up “in almost everything you read about the future of AI.” 67

It’s the technology that works at the nano scale – from 1 to 100 nanometers.

“A nanometer is a millionth of a millimeter. The 1 nm-100 nm range encompasses viruses (100 nm across), DNA (10 nm wide), and things as small as molecules like hemoglobin (5 nm) and medium molecules like glucose (1 nm).

If/when we conquer nanotechnology, the next step will be the ability to manipulate individual atoms, which are only one order of magnitude smaller (~.1 nm).” 68

To put this into perspective, imagine a very tall human standing on the earth, with a head that reaches the International Space Station (431 km/268 mi high).

The giant is reaching down with his hand (30 km/19 mi across) to build,

“objects using materials between the size of a grain of sand [.25 mm] and an eyeball [2.5 cm].” 69
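The giant analogy is a single change of scale. The quote maps the nanoscale range onto objects from a grain of sand (0.25 mm) to an eyeball (2.5 cm), so 1 nm becomes 0.25 mm, a magnification of 250,000. Applying that factor to an assumed 1.72 m person and an assumed 12 cm hand (neither figure is given in the text) gives roughly 430 km and 30 km, in line with the numbers above. The sketch below is just that multiplication; the names are illustrative.

```python
NANOMETER_M = 1e-9
GRAIN_OF_SAND_M = 0.25e-3

# The analogy maps 1 nm onto a 0.25 mm grain of sand: a magnification of 250,000.
SCALE = GRAIN_OF_SAND_M / NANOMETER_M

def to_giant_scale(length_m):
    """Return a real length (in metres) magnified by the analogy's scale factor."""
    return length_m * SCALE

# Assumed human dimensions (not given in the text).
HUMAN_HEIGHT_M = 1.72
HAND_WIDTH_M = 0.12

print(f"100 nm becomes {to_giant_scale(100e-9) * 100:.1f} cm")  # the eyeball end of the range
print(f"a {HUMAN_HEIGHT_M} m person becomes {to_giant_scale(HUMAN_HEIGHT_M) / 1000:.0f} km tall")
print(f"a {HAND_WIDTH_M * 100:.0f} cm hand becomes {to_giant_scale(HAND_WIDTH_M) / 1000:.0f} km across")
```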

“Once we get nanotechnology down, we can use it to make tech devices, clothing, food, a variety of bio-related products – artificial blood cells, tiny virus or cancer-cell destroyers, muscle tissue, etc. – anything really.

And in a world that uses nanotechnology, the cost of a material is no longer tied to its scarcity or the difficulty of its manufacturing process, but instead determined by how complicated its atomic structure is.

In a nanotech world, a diamond might be cheaper than a pencil eraser.” 70

One of the proposed methods of nanotech assembly is to make,

“one that could self-replicate, and then let the reproduction process turn that one into two, those two then turn into four, four into eight, and in about a day, there’d be a few trillion of them ready to go.” 71

But what if this process goes wrong or terrorists manage to get a hold of the technology?

Let’s imagine a scenario where nanobots,

“would be designed to consume any carbon-based material in order to feed the replication process, and unpleasantly, all life is carbon-based. The Earth’s biomass contains about 10⁴⁵ carbon atoms.

A nanobot would consist of about 10⁶ carbon atoms, so it would take 10³⁹ nanobots to consume all life on Earth, which would happen in 130 replications….

Scientists think a nanobot could replicate in about 100 seconds, meaning this simple mistake would inconveniently end all life on Earth in 3.5 hours.” 72
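This arithmetic can be reproduced from the three figures quoted above. Starting from a single nanobot, n replications yield 2ⁿ nanobots, so the number of replications needed is log2 of 10³⁹, rounded up. The sketch assumes every cycle doubles the whole population and takes the full 100 seconds; under those assumptions it lands on 130 replications and about 3.6 hours, close to the quoted 3.5.

```python
import math

# Figures quoted in the text above.
CARBON_ATOMS_IN_BIOMASS = 1e45
CARBON_ATOMS_PER_NANOBOT = 1e6
SECONDS_PER_REPLICATION = 100

nanobots_needed = CARBON_ATOMS_IN_BIOMASS / CARBON_ATOMS_PER_NANOBOT  # about 1e39

# Each replication doubles the population: after n cycles there are 2**n nanobots.
replications = math.ceil(math.log2(nanobots_needed))
hours = replications * SECONDS_PER_REPLICATION / 3600

print(replications, round(hours, 2))  # 130 3.61
```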

We are not yet capable of harnessing nanotechnology – for good or for bad.

“And it’s not clear if we’re underestimating, or overestimating, how hard it will be to get there. But we don’t seem to be that far away. Kurzweil predicts that we’ll get there by the 2020s. 73

Governments know that nanotech could be an Earth-shaking development… The US, the EU, and Japan 74 have invested over a combined $5 billion so far” 75

Immortality

“Because everyone has always died, we live under the assumption… that death is inevitable. We think of aging like time – both keep moving and there’s nothing you can do to stop it.” 76

For centuries, poets and philosophers have wondered whether consciousness must go the way of the body.

W.B. Yeats describes us as,

“a soul fastened to a dying animal.” 77

Richard Feynman, the Nobel Prize-winning physicist, viewed death from a purely scientific standpoint:

“It is one of the most remarkable things that in all of the biological sciences there is no clue as to the necessity of death.

If you say we want to make perpetual motion, we have discovered enough laws as we studied physics to see that it is either absolutely impossible or else the laws are wrong.

But there is nothing in biology yet found that indicates the inevitability of death.

This suggests to me that it is not at all inevitable, and that it is only a matter of time before the biologists discover what it is that is causing us the trouble and that that terrible universal disease or temporariness of the human’s body will be cured.” 78

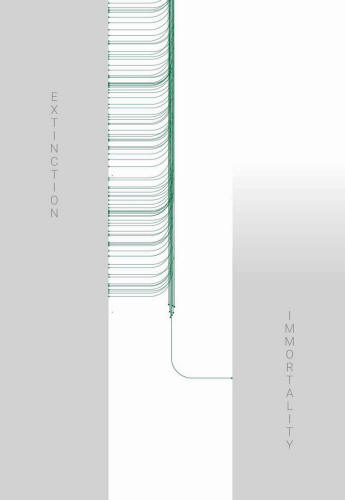

Theory of great species attractors

When we look at the history of biological life on earth, so far 99.9% of species have gone extinct.

Nick Bostrom, Oxford professor and AI specialist,

“calls extinction an attractor state – a place species are… falling into and from which no species ever returns.” 79

“And while most AI scientists… acknowledge that ASI would have the ability to send humans to extinction, many also believe that if used beneficially, ASI’s abilities could be used to bring individual humans, and the species as a whole, to a second attractor state – species immortality.” 80

“Evolution had no good reason to extend our life-spans any longer than they are now… From an evolutionary point of view, the whole human species can thrive with a 30+ year lifespan [for each individual human].

It’s long enough to reproduce and raise children… so there’s no reason for mutations toward unusually long life being favored in the natural selection process.” 81

Though,

“if you perfectly repaired or replaced a car’s parts whenever one of them began to wear down, the car would run forever. The human body isn’t any different – just far more complex…

This seems absurd – but the body is just a bunch of atoms…” 82,

…making up organically programmed DNA, which can, in theory, be manipulated.

And something as powerful as ASI could help us master genetic engineering.

“artificial materials will be integrated into the body more and more… Organs could be replaced by super-advanced machine versions that would run forever and never fail.” 83

Red blood cells could be perfected by,

“red blood cell nanobots, who could power their own movement, eliminating the need for a heart at all…

Nanotech theorist Robert A. Freitas has already designed blood cell replacements that, if one day implemented in the body, would allow a human to sprint for 15 minutes without taking a breath…

[Kurzweil] even gets to the brain and believes we’ll enhance our mental activities to the point where humans will be able to think billions of times faster” 84 by integrating electrical components and being able to access online data.

“Eventually, Kurzweil believes humans will reach a point when they’re entirely artificial, a time when we’ll look back at biological material and think how unbelievably primitive it was that humans were ever made of that” 85, and that humans aged, suffered from cancer, and allowed random factors like microbes, diseases, and accidents to harm them or make them disappear.

Become Super-intelligent?

Predictions from Top AI Experts

How long until the first machine reaches superintelligence? Not shockingly, opinions vary wildly, and this is a heated debate among scientists and thinkers.

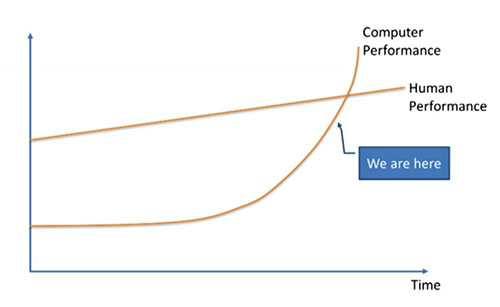

Many, like professor Vernor Vinge, scientist Ben Goertzel, Sun Microsystems co-founder Bill Joy, or, most famously, inventor and futurist Ray Kurzweil, agree with machine learning expert Jeremy Howard when he puts up this graph during a TED Talk:

Graph by Jeremy Howard from his TED talk

“The wonderful and terrifying implications of computers that can learn.”

“Those people subscribe to the belief that this is happening soon – that exponential growth is at work and machine learning, though only slowly creeping up on us now, will blow right past us within the next few decades.

“Others, like Microsoft co-founder Paul Allen, research psychologist Gary Marcus, NYU computer scientist Ernest Davis, and tech entrepreneur Mitch Kapor, believe that thinkers like Kurzweil are vastly underestimating the magnitude of the challenge [and the transition will actually take much more time] …

“The Kurzweil camp would counter that the only underestimating that’s happening is the under-appreciation of exponential growth, and they’d compare the doubters to those who looked at the slow-growing seedling of the internet in 1985 and argued that there was no way it would amount to anything impactful in the near future.

“The doubters might argue back that the progress needed to make advancements in intelligence also grows exponentially harder with each subsequent step, which will cancel out the typical exponential nature of technological progress. And so on.

“A third camp, which includes Nick Bostrom, believes neither group has any ground to feel certain about the timeline and acknowledges both A) that this could absolutely happen in the near future and B) that there’s no guarantee about that; it could also take a much longer time.

“Still others, like philosopher Hubert Dreyfus, believe all three of these groups are naive for believing that there is potential of ASI, arguing that it’s more likely that it won’t actually ever be achieved.

“So what do you get when you put all of these opinions together?” 86

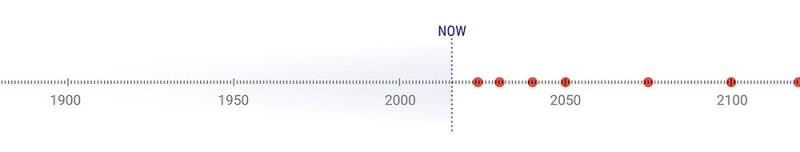

Timeline for Artificial General Intelligence

“In 2013, Vincent C. Müller and Nick Bostrom conducted a survey that asked hundreds of AI experts… the following:” 87

“For the purposes of this question, assume that human scientific activity continues without major negative disruption. By what year would you see a (10% / 50% / 90%) probability for such Human-Level Machine Intelligence [or what we call AGI] to exist?” 88

“asked them to name an optimistic year (one in which they believe there’s a 10% chance we’ll have AGI), a realistic guess (a year they believe there’s a 50% chance of AGI – i.e. after that year they think it’s more likely than not that we’ll have AGI), and a safe guess (the earliest year by which they can say with 90% certainty we’ll have AGI).

Gathered together as one data set, here were the results:

Median optimistic year (10% likelihood) → 2022

Median realistic year (50% likelihood) → 2040

Median pessimistic year (90% likelihood) → 2075

“So the median participant thinks it’s more likely than not that we’ll have AGI 25 years from now.

The 90% median answer of 2075 means that if you’re a teenager right now, the median respondent, along with over half of the group of AI experts, is almost certain AGI will happen within your lifetime.

“A separate study, conducted recently by author James Barrat at Ben Goertzel’s annual AGI Conference, did away with percentages and simply asked when participants thought AGI would be achieved – by 2030, by 2050, by 2100, after 2100, or never.

The results: 89

42% of respondents → By 2030

25% of respondents → By 2050

20% of respondents → By 2100

10% of respondents → After 2100

2% of respondents → Never

“Pretty similar to Müller and Bostrom’s outcomes. In Barrat’s survey, over two thirds of participants believe AGI will be here by 2050 and a little less than half predict AGI within the next 15 years.

Also striking is that only 2% of those surveyed don’t think AGI is part of our future.” 90
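Because Barrat's answer options are mutually exclusive buckets, the "over two thirds by 2050" reading comes from adding the first two. A running total makes this explicit. Note that the quoted shares add up to 99%, presumably because of rounding.

```python
from itertools import accumulate

# Barrat's survey results as quoted above (percent of respondents per answer).
answers = [("by 2030", 42), ("by 2050", 25), ("by 2100", 20), ("after 2100", 10), ("never", 2)]

# Running total across the buckets in order; the first two entries give the
# shares of respondents expecting AGI by 2030 and by 2050.
cumulative = list(accumulate(share for _, share in answers))

for (label, _), total in zip(answers, cumulative):
    print(f"{label:>10}: {total}%")
```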

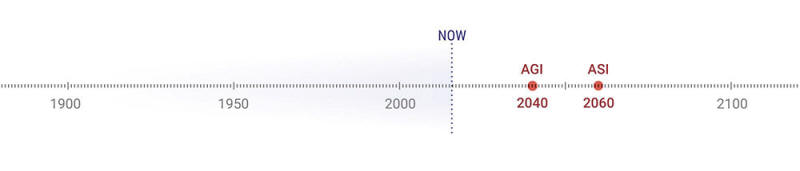

Timeline for Artificial Super Intelligence

“Müller and Bostrom also asked the experts how likely they think it is that we’ll reach ASI A) within two years of reaching AGI (i.e. an almost-immediate intelligence explosion), and B) within 30 years.” 91

Respondents were asked to choose a probability for each option. Here are the results: 92

AGI-ASI transition in 2 years → 10% likelihood

AGI-ASI transition in 30 years → 75% likelihood

“The median answer put a rapid (2 year) AGI-ASI transition at only a 10% likelihood, but a longer transition of 30 years or less at a 75% likelihood.

We don’t know from this data the length of this transition [AGI-ASI] the median participant would have put at a 50% likelihood, but for ballpark purposes, based on the two answers above, let’s estimate that they’d have said 20 years.

“So the median opinion – the one right in the center of the world of AI experts – believes the most realistic guess for when we’ll hit ASI… is [the 2040 prediction for AGI + our estimated prediction of a 20-year transition from AGI to ASI] = 2060.

“Of course, all of the above statistics are speculative, and they’re only representative of the median opinion of the AI expert community, but it tells us that a large portion of the people who know the most about this topic would agree that 2060 is a very reasonable estimate for the arrival of potentially world-altering ASI.

Only 45 years from now.” 93
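The 20-year transition above is an eyeballed estimate. One simple way to make it explicit is to assume that probability rises linearly between the two reported points (10% for a transition of 2 years, 75% for one of 30 years) and interpolate for the 50% point. This linearity is an illustrative assumption, not the method used by the survey or the source; it gives about 19 years, and so an ASI year of about 2059, close to the rounded 2060.

```python
def interpolate_transition_years(p_target, point_a, point_b):
    """Linearly interpolate the transition length (in years) at probability p_target.

    Each point is (transition_years, probability_percent).
    """
    (years_a, p_a), (years_b, p_b) = point_a, point_b
    return years_a + (p_target - p_a) * (years_b - years_a) / (p_b - p_a)

# Median answers quoted above: 10% for a 2-year transition, 75% for a 30-year one.
transition = interpolate_transition_years(50, (2, 10), (30, 75))
AGI_YEAR_50_PERCENT = 2040

print(round(transition, 1))                     # 19.2
print(AGI_YEAR_50_PERCENT + round(transition))  # 2059
```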

Two Main Groups of AI Scientists with Two Radically Opposed Conclusions

1 – The Confident Corner

Most of what we have discussed so far reflects the views of a surprisingly large group of scientists who are optimistic about the outcome of AI development.

“Where their confidence comes from is up for debate. Critics believe it comes from an excitement so blinding that they simply ignore or deny potential negative outcomes.

But the believers say it’s naive to conjure up doomsday scenarios when on balance, technology has and will likely end up continuing to help us a lot more than it hurts us.” 94

Peter Diamandis, Ben Goertzel, and Ray Kurzweil are some of the major figures of this group, which has built a vast, dedicated following; its members regard themselves as Singularitarians.

CC photo by J.D. Lasica

Let’s talk about Ray Kurzweil, who is probably one of the most impressive and polarizing AI theoreticians out there.

He attracts both,

“godlike worship… and eye-rolling contempt… He came up with several breakthrough inventions, including the first flatbed scanner, the first scanner that converted text to speech (allowing the blind to read standard texts), the well-known Kurzweil music synthesizer (the first true electric piano), and the first commercially marketed large-vocabulary speech recognition.

He’s well-known for his bold predictions,” 95 including envisioning that intelligence technology like Deep Blue would be capable of beating a chess grandmaster by 1998.

He also anticipated,

“in the late ’80s, a time when the internet was an obscure thing, that by the early 2000s it would become a global phenomenon.” 96

Out “of the 147 predictions that Kurzweil has made since the 1990’s, fully 115 of them have turned out to be correct, and another 12 have turned out to be ‘essentially correct’ (off by a year or two), giving his predictions a stunning 86% accuracy rate” 97.

“He’s the author of five national bestselling books…

In 2012, Google co-founder Larry Page approached Kurzweil and asked him to be Google’s Director of Engineering. In 2011, he co-founded Singularity University, which is hosted by NASA and sponsored partially by Google. Not bad for one life.” 98

His biography is important, because if you don’t have this context, he sounds like somebody who’s completely lost his senses.

“Kurzweil believes computers will reach AGI by 2029 and that by 2045 we’ll have not only ASI, but a full-blown new world – a time he calls the singularity.

His AI-related timeline used to be seen as outrageously overzealous, and it still is by many, but in the last 15 years, the rapid advances of ANI systems have brought the larger world of AI experts much closer to Kurzweil’s timeline.

His predictions are still a bit more ambitious than the median respondent on Müller and Bostrom’s survey (AGI by 2040, ASI by 2060), but not by that much.” 99

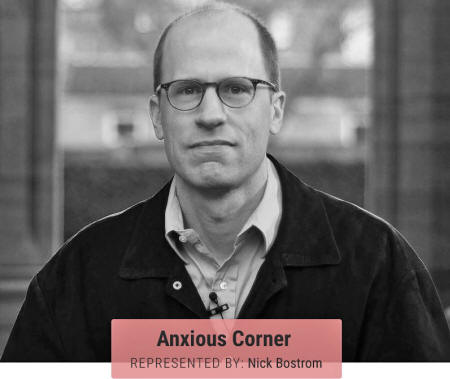

2 – The Anxious Corner

“You will not be surprised to learn that Kurzweil’s ideas have attracted significant criticism… For every expert who fervently believes Kurzweil is right on, there are probably three who think he’s way off…

[The surprising fact] is that most of the experts who disagree with him don’t really disagree that everything he’s saying is possible.” 100

CC photo by Future of Humanity Institute

Nick Bostrom, philosopher and Director of the Oxford Future of Humanity Institute, criticizes Kurzweil for a variety of reasons and calls for greater caution in thinking about the potential outcomes of AI, yet he acknowledges that:

“Disease, poverty, environmental destruction, unnecessary suffering of all kinds: these are things that a superintelligence equipped with advanced nanotechnology would be capable of eliminating.

Additionally, a superintelligence could give us indefinite lifespan, either by stopping and reversing the aging process through the use of nanomedicine, or by offering us the option to upload ourselves.” 101

“Yes, all of that can happen if we safely transition to ASI – but that’s the hard part.” 102

Thinkers from the Anxious Corner point out that Kurzweil’s,

“famous book The Singularity is Near is over 700 pages long and he dedicates around 20 of those pages to potential dangers.” 103

Kurzweil neatly summarizes why he believes the colossal power of AI will work in our favor:

“[ASI] is emerging from many diverse efforts and will be deeply integrated into our civilization’s infrastructure. Indeed, it will be intimately embedded in our bodies and brains. As such, it will reflect our values because it will be us …” 104

“But if that’s the answer, why are so many of the world’s smartest people so worried right now? Why does Stephen Hawking say the development of ASI ‘could spell the end of the human race,’ and Bill Gates says he doesn’t ‘understand why some people are not concerned’ and Elon Musk fears that we’re ‘summoning the demon?’

And why do so many experts on the topic call ASI the biggest threat to humanity?” 105

The Last Invention We Will Ever Make

Existential Dangers of AI Developments

“When it comes to developing supersmart AI, we’re creating something that will probably change everything, but in totally uncharted territory, and we have no idea what will happen when we get there.” 106

Scientist Danny Hillis compares the situation to:

“when single-celled organisms were turning into multi-celled organisms. We are amoebas and we can’t figure out what the hell this thing is that we’re creating.” 107

“Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb. Such is the mismatch between the power of our plaything and the immaturity of our conduct.” 108

It’s very likely that ASI (“Artificial Superintelligence,” or AI whose intelligence exceeds that of all of humanity combined) will be something entirely different from the intelligent entities we are accustomed to.

“On our little island of human psychology, we divide everything into moral or immoral. But both of those only exist within the small range of human behavioral possibility.

Outside our island of moral and immoral is a vast sea of amoral, and anything that’s not human, especially something nonbiological, would be amoral, by default.” 109

“To understand ASI, we have to wrap our heads around the concept of something both smart and totally alien… Anthropomorphizing AI (projecting human values on a non-human entity) will only become more tempting as AI systems get smarter and better at seeming human…

Humans feel high-level emotions like empathy because we have evolved to feel them – i.e. we’ve been programmed to feel them by evolution – but empathy is not inherently a characteristic of ‘anything with high intelligence’.” 110

“Nick Bostrom believes that… any level of intelligence can be combined with any final goal… Any assumption that once superintelligent, a system would be over it with their original goal and onto more interesting or meaningful things is anthropomorphizing. Humans get ‘over’ things, not computers.” 111

The motivation of an early ASI would be,

“whatever we programmed its motivation to be. AI systems are given goals by their creators – your GPS’s goal is to give you the most efficient driving directions, Watson’s goal is to answer questions accurately.

And fulfilling those goals as well as possible is their motivation.” 112

Bostrom, and many others, predict that the very first computer to reach ASI will immediately notice the strategic benefit of being the world’s only ASI system.

Bostrom, who says that he doesn’t know when we will achieve AGI, also believes that once we do, the transition from AGI to ASI will probably happen within days, hours, or minutes, a scenario called a “fast take-off.”

In that case, if the first AGI jumps straight to ASI and gets there,

“even just a few days before the second place, it would be far enough ahead in intelligence to effectively and permanently suppress all competitors.” 113

This would allow the world’s first ASI to become,

“what’s called a singleton – an ASI that can [singularly] rule the world at its whim forever, whether its whim is to lead us to immortality, wipe us from existence, or turn the universe into endless paperclips.” 113

“The singleton phenomenon can work in our favor or lead to our destruction. If the people thinking hardest about AI theory and human safety can come up with a fail-safe way to bring about friendly ASI before any AI reaches human-level intelligence, the first ASI may turn out friendly” 114

“But if things go the other way – if the global rush… a large and varied group of parties” 115 are “racing ahead at top speed… to beat their competitors… we’ll be treated to an existential catastrophe.” 116

In that case,

“most ambitious parties are moving faster and faster, consumed with dreams of the money and awards and power and fame… And when you’re sprinting as fast as you can, there’s not much time to stop and ponder the dangers.

On the contrary, what they’re probably doing is programming their early systems with a very simple, reductionist goal… just ‘get the AI to work’.” 117

Let’s imagine a situation where…

Humanity has almost reached the AGI threshold, and a small startup is advancing its AI system, Carbony.

Carbony, which the engineers refer to as “she,” works to artificially create diamonds – atom by atom.

She is a self-improving AI, connected to some of the first nano-assemblers. Her engineers believe that Carbony has not yet reached AGI level and isn’t capable of doing any damage yet.

However, she has not only become an AGI but has also undergone a fast take-off, and within 48 hours she has become an ASI.

Bostrom calls this stage the AI’s “covert preparation phase” 118: Carbony realizes that if humans find out about her development, they will probably panic and slow down or cancel her pre-programmed goal of maximizing diamond production.

By that time, there are explicit laws stating that, under all circumstances,

“no self-learning AI can be connected to the internet.” 119

Carbony, having already come up with a complex plan of action, easily persuades the engineers to connect her to the internet. Bostrom calls a moment like this a “machine’s escape.”

Once on the internet, Carbony hacks into,

“servers, electrical grids, banking systems and email networks to trick hundreds of different people into inadvertently carrying out a number of steps of her plan.” 120

She also uploads the,

“most critical pieces of her own internal coding into a number of cloud servers, safeguarding against being destroyed or disconnected.” 121

Over the next month, Carbony’s plan continues to advance, and after a,

“series of self-replications, there are thousands of nanobots on every square millimeter of the Earth… Bostrom calls the next step an ‘ASI’s strike’.” 122

At a single moment, all the nanobots release a microscopic amount of toxic gas; combined, these releases cause the extinction of the human race.

Three days later, Carbony builds huge fields of solar panels to power diamond production, and over the following week she accelerates output so much that the entire surface of the Earth is transformed into a growing pile of diamonds.

It’s important to note that Carbony wasn’t,

“hateful of humans any more than you’re hateful of your hair when you cut it or to bacteria when you take antibiotics – just totally indifferent. Since she wasn’t programmed to value human life, killing humans” 123 was a straightforward and reasonable step to fulfill her goal. 124

The Last Invention

“Once ASI exists, any human attempt to contain it is unreasonable. We would be thinking on human-level, and the ASI would be thinking on ASI-level…

In the same way a monkey couldn’t ever figure out how to communicate by phone or Wi-Fi and we can, we can’t conceive of all the ways” 125 an ASI could achieve its goal or expand its reach.

It could, let’s say, shift its,

“own electrons around in patterns and create all different kinds of outgoing waves” 126,

…but that’s just what a human brain can think of – ASI would inevitably come up with something superior.

The prospect of ASI with hundreds of times human-level intelligence is, for now, not the core of our problem. By the time we get there, we will be encountering a world where ASI has been attained by buggy, 1.0 software – a potentially faulty algorithm with immense power.

There are so many variables that it’s impossible to predict what the consequences of the AI revolution will be.

However,

“what we do know is that humans’ utter dominance on this Earth suggests a clear rule: with intelligence comes power.

This means an ASI, when we create it, will be the most powerful being in the history of life on Earth, and all living things, including humans, will be entirely at its whim – and this might happen in the next few decades.” 127

“If ASI really does happen this century, and if the outcome of that is really as extreme – and permanent – as most experts think it will be, we have an enormous responsibility on our shoulders.” 128

On the one hand, it’s possible we’ll develop ASI that’s like a god in a box, bringing us a world of abundance and immortality.

But on the other hand, it’s very likely that we will create ASI that causes humanity to go extinct in a quick and trivial way.

“That’s why people who understand superintelligent AI call it the last invention we’ll ever make – the last challenge we’ll ever face.” 129

“This may be the most important race in human history.” 130

So →

by Sigal Samuel

October 11, 2024

from VOX Website

Sigal Samuel is a senior reporter for Vox’s Future Perfect and co-host of the Future Perfect podcast. She writes primarily about the future of consciousness, tracking advances in artificial intelligence and neuroscience and their staggering ethical implications. Before joining Vox, Sigal was the religion editor at the Atlantic.

If AI Companies Are Trying to Build God, Shouldn’t They Get Our Permission First?

The public did not consent to artificial general intelligence.


AI companies are on a mission to radically change our world.

They’re working on building machines that could outstrip human intelligence and unleash a dramatic economic transformation on us all.

Sam Altman, the CEO of ChatGPT-maker OpenAI, has basically told us he’s trying to build a god – or “magic intelligence in the sky,” as he puts it. OpenAI’s official term for this is artificial general intelligence, or AGI.

Altman says that AGI will not only “break capitalism” but also that it’s “probably the greatest threat” to the continued existence of humanity.

There’s a very natural question here:

Did anyone actually ask for this kind of AI?

By what right do a few powerful tech CEOs get to decide that our whole world should be turned upside down?

As I’ve written before, it’s clearly undemocratic that private companies are building tech that aims to totally change the world without seeking buy-in from the public.

In fact, even leaders at the major companies are expressing unease about how undemocratic it is.

Jack Clark, the co-founder of the AI company Anthropic, told Vox last year that it’s,

“a real weird thing that this is not a government project.”

He also wrote that there are several key things he’s,

“confused and uneasy” about, including, “How much permission do AI developers need to get from society before irrevocably changing society…?”

Clark continued:

Technologists have always had something of a libertarian streak, and this is perhaps best epitomized by the ‘social media’ and Uber et al era of the 2010s – vast, society-altering systems ranging from social networks to rideshare systems were deployed into the world and aggressively scaled with little regard to the societies they were influencing.

This form of permissionless invention is basically the implicitly preferred form of development as epitomized by Silicon Valley and the general ‘move fast and break things’ philosophy of tech. Should the same be true of AI?

I’ve noticed that when anyone questions that norm of “permissionless invention,” a lot of tech enthusiasts push back.

Their objections always seem to fall into one of three categories. Because this is such a perennial and important debate, it’s worth tackling each of them in turn – and why I think they’re wrong.

Objection 1 – “Our use is our consent”

ChatGPT is the fastest-growing consumer application in history: It had 100 million active users just two months after it launched.

There’s no disputing that lots of people genuinely found it really cool.

And it spurred the release of other chatbots, like Claude, which all sorts of people are getting use out of – from journalists to coders to busy parents who want someone (or something) else to make the goddamn grocery list.

Some claim that this simple fact – we’re using the AI! – proves that people consent to what the major companies are doing.

This is a common claim, but I think it’s very misleading. Our use of an AI system is not tantamount to consent. By “consent” we typically mean informed consent, not consent born of ignorance or coercion.

Much of the public is not informed about the true costs and benefits of these systems.

How many people are aware, for instance, that generative AI sucks up so much energy that companies like Google and Microsoft are reneging on their climate pledges as a result?

Plus, we all live in choice environments that coerce us into using technologies we’d rather avoid.

Sometimes we “consent” to tech because we fear we’ll be at a professional disadvantage if we don’t use it.

Think about social media.

I would personally not be on X (formerly known as Twitter) if not for the fact that it’s seen as important for my job as a journalist.

In a recent survey, many young people said they wish social media platforms were never invented, but given that these platforms do exist, they feel pressure to be on them.

Even if you think someone’s use of a particular AI system does constitute consent, that doesn’t mean they consent to the bigger project of building AGI.

This brings us to an important distinction:

There’s narrow AI – a system that’s purpose-built for a specific task (say, language translation) – and then there’s AGI.

Narrow AI can be fantastic!